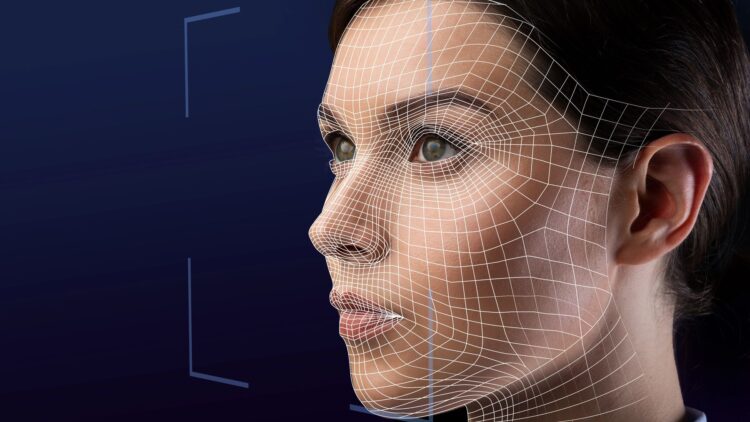

The rapid growth of AI, and the speed at which people have adopted it, has created new risks. While the technology has shown potential to solve problems on a global scale, some people exploit it for harmful ends, putting trust in AI as a whole in jeopardy. Thanks to social media, smartphones, and continuous internet updates, people can see and hear news from around the world in a matter of seconds. This keeps us informed and connected most of the time. However, what we see and hear is sometimes not genuine at all.

There is a need to use tools that detect fake information made through AI

Companies and organisations must use advanced tools to detect and stamp out misinformation and deepfake content to help counter growing risks of election interference and financial fraud, the United Nations’ International Telecommunication Union (ITU) urged in a report on Friday. Deepfakes, such as AI-generated images, videos, and audio that convincingly impersonate real people, pose mounting risks, the ITU said in the report, released at its “AI for Good Summit” in Geneva.

The ITU called for robust standards to combat manipulated multimedia and recommended that content distributors such as social media platforms use digital verification tools to authenticate images and videos before sharing. The aim is to stop fake videos and images from circulating and manipulating people.

Bilel Jamoussi, Chief of the Study Groups Department at the ITU’s Standardisation Bureau, noted:

“Trust in social media has dropped significantly because people don’t know what’s true and what’s fake.”

Establishing these tools will help with user trust and security online

Combatting deepfakes was a top challenge because of generative AI’s ability to fabricate realistic multimedia, Jamoussi said. Leonard Rosenthol of Adobe, a digital editing software leader that has been addressing deepfakes since 2019, underscored the importance of establishing the provenance of digital content to help users assess its trustworthiness.

AI-generated images and videos have already drawn innocent people into scams. For instance, scammers have taken video and voice recordings of prominent figures and generated fake clips of them, to lure their followers or subscribers into schemes that look genuine but are fraudulent. That makes the issue a very sensitive one.

AI will become stronger and better with time, and this global problem needs attention as soon as possible

Rosenthol added:

“We need more of the places where users consume their content to show this information… When you are scrolling through your feeds, you want to know: ‘can I trust this image, this video…’”

Dr. Farzaneh Badiei, the founder of digital governance research firm Digital Medusa, stressed the importance of a global approach to the problem, given that there is currently no single international watchdog focusing on detecting manipulated material. The ITU is currently developing standards for watermarking videos, which make up 80% of internet traffic, to embed provenance data such as creator identity and timestamps.

Tomaz Levak, founder of Switzerland-based Umanitek, urged the private sector to proactively implement safety measures and educate users. “AI will only get more powerful, faster or smarter… We’ll need to upskill people to make sure that they are not victims of the systems,” he said. For now, the work depends on experts, and it is urgent: the longer it is delayed, the worse the problem becomes, and no one knows what else AI will make possible. Deepfakes tarnish reputations, so detection and verification tools are needed to help people find authentic content.

GCN.com/Reuters.