News

Our News category at Global Current News provides fast, reliable coverage of breaking events and key developments worldwide, including in the United States. From political decisions and economic shifts to cultural milestones and scientific breakthroughs, our skilled journalists bring you the latest reports with precise, concise analysis. Stay informed and connected to the global conversation with up-to-the-minute updates.

New era for the blue planet: America and 140 other nations are finally coming together to save the deep sea

On January 17, 2026, the first legally binding international treaty to protect marine biodiversity on the high seas will come...

China selects Shenzhen as host city for 2026 APEC summit

China's choice of Shenzhen as the host city for the APEC Leaders' Summit, an event to be held in 2026,...

UN General Assembly schedules mid-2026 vote to elect five new rotating Security Council members

The elections for new non-permanent members of the United Nations Security Council are scheduled for mid-2026. The elections will be...

Mounting climate alarms ahead of 2026 as Arctic warming accelerates and carbon limits near collapse

Experts are sounding the alarm as the remaining carbon budget for keeping warming below 1.5°C shrinks to only 130...

Analysts warn of possible political unrest in 2026 across Bangladesh, Nepal, and Pakistan

Forecasts for 2026 suggest that Bangladesh, Nepal, and Pakistan could face a rise in political unrest. Analysts point to a...

Global powers agree to convene 2026 summit aimed at reinforcing humanitarian law in conflict zones

World leaders are set to hold a historic summit in January 2026 to strengthen respect for international humanitarian law (IHL),...

Emerging influenza variant strains hospitals across Europe and stresses public health capacity

This year's flu season, the first for which data have been collected across the entire region, is putting a strain on the...

Tensions flare again along the Cambodia-Thailand border following renewed clashes and troop movements

On December 8, Thailand and Cambodia exchanged fire in many contested sectors, most significantly the Preah Vihear and Oddar Meanchey...

Cyclones Senyar and Ditwah drive deadly flooding across multiple parts of South and Southeast Asia

Cyclones Senyar and Ditwah, two rare and unusually complex storms, triggered significant landslides and floods affecting...

Italy hails UNESCO decision to recognize Italian cuisine as part of world cultural heritage

In December 2025, UNESCO approved the listing of Italy’s cuisine as a whole in New Delhi, India, during the 20th session of the...

UN high seas treaty to enter into force in early 2026, ushering in new ocean governance era

The world is on the verge of an entirely new era in the governance and protection...

World Bank trims East Asia-Pacific 2026 growth forecast to 4.3%

In October, the World Bank released a new economic projection that prompted commentary about the pace...

U.S. secretary of state to tour Central Asia in 2026 amid push for deeper resource cooperation

During a reception at the State Department on November 5, U.S. Secretary of State Marco Rubio hosted the foreign ministers of...

Portugal’s constitutional court strikes down core provisions of the government’s new citizenship law

Portugal's nationality law has been amended numerous times since it was enacted in 1981. These amendments reflect the current political...

Democratic Republic of the Congo declares end of Ebola outbreak after weeks with no new cases

After several weeks without reported cases of the Ebola virus, the Democratic Republic of the Congo announced it had...

Nationwide walkout disrupts Portugal as opposition to labor-law changes escalates

Proposed changes to labor laws have led to Portugal's first general strike in 12 years. Walkouts at millions...

Trump administration broadens U.S. travel restrictions, barring supporters from Senegal and Ivory Coast ahead of the 2026 World Cup

Through an executive order, the United States is expanding its previously announced travel ban to include two new...

Sudan returns to the top of global humanitarian emergency rankings as conditions deteriorate

The International Rescue Committee (IRC) has added Sudan to its watchlist. The report, published on 16 December, shows the correlation...

India deepens diplomatic ties with Ethiopia as Prime Minister Narendra Modi visits Addis Ababa

Prime Minister Narendra Modi began a historic first visit to Ethiopia this week, opening a new era...

India’s prime minister sets off on a three-country diplomatic tour covering Jordan, Ethiopia, and Oman

India's growing engagement with West Asia and Africa shapes its economic, energy, and strategic interests. The three-nation visit demonstrates...

Syria commemorates the first anniversary of the collapse of Bashar al-Assad’s government

Syria is marking one year since the collapse of Bashar al-Assad’s government, a major milestone after 14 years...

International condemnation follows deadly attack at a Jewish festival in Sydney’s Bondi area

Leaders and communities worldwide have expressed concern and solidarity. Citizens and families from...

United Nations warns that rising global obesity and overweight trends are becoming a severe public health hazard

A health crisis of unprecedented dimensions is taking place across the world, the consequences of which are being felt in...

Congo and Rwanda accused of violating newly signed Washington peace accord within days of agreement

After the recent conflict between the Democratic Republic of the Congo (DRC) and Rwanda, the Washington Peace Accord signed between...

Starmer calls on European leaders to reform ECHR rules to stem the advance of far-right movements

UK Prime Minister Keir Starmer has asked other European leaders to update the European Convention on Human Rights (ECHR) as...

Mexico authorizes higher tariffs on imports from China and other Asian economies

Trade tariffs that Mexico has started to implement are aimed at protecting the country’s manufacturing sector and gaining more control...

Uganda set to receive up to $1.7 billion in U.S. healthcare funding under proposed Trump initiative

Under a new bilateral partnership announced in December 2025, Uganda is expected to receive up to $1.7 billion as part of...

Chile votes in José Antonio Kast, marking a decisive shift toward the political right

The next chapter of Chile's political history will be shaped by José Antonio Kast of the Republican Party, and the country's latest dramatic...

Pakistan unlocks a further $1.2 billion from the IMF as reform commitments move ahead

Pakistan recently secured a further $1.2 billion in IMF funding. The funding reflects the progress the country has made on...

Escalation in Yemen raises fears of civil war returning

Yemen faces renewed fears of civil conflict after a period of stalemate. The Southern Transitional Council (STC)...

Zelensky signals willingness to hold elections if the U.S. can guarantee security conditions

Ukrainian President Volodymyr Zelensky recently signaled a possible major change in Ukraine's wartime governance. Zelensky...

Military transport plane crashes in Sudan conflict, killing entire crew

The crisis in Sudan worsened recently when a military transport aircraft, an Ilyushin Il-76 cargo plane, crashed while attempting...

Australia implements the world’s first nationwide social media limits for minors

Australia has become the first country in the world to impose such technology regulations nationwide, prohibiting the...

Venezuela’s aviation crisis deepens as mass flight suspensions force widespread passenger rerouting

Following the U.S. airspace proclamation, airlines have suspended flights to and from Venezuela. This has resulted in airlines...

Renewed violence in Yemen risks reopening full-scale civil conflict and destabilizing the wider Gulf

Yemen had begun to stabilize after a period of extreme hardship. This period, however, was accompanied...

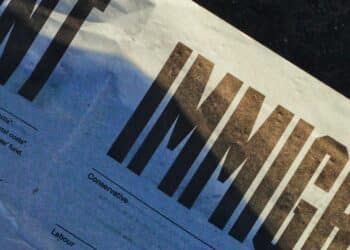

Worldwide poll shows crime, inflation, and immigration rank as top public worries heading into 2025

Crime and violence have reached the highest levels of concern globally since the first quarter of 2019, according to an...

Syria commemorates one year since the fall of Bashar al-Assad’s regime

December 8th marked one year since the fall of Bashar al-Assad's regime. With this anniversary, many emotions are stirred among...

Myanmar’s proposed December general election raises alarms over severe political repression

Since Myanmar’s military announced the country’s first post-coup general elections in May 2023, the military has...

International Criminal Court shuts its Caracas office, citing lack of cooperation and stalled proceedings

The International Criminal Court (ICC) has decided to close its office in Caracas, generating a wave of questions, especially about...

International Criminal Court shuts its Caracas office, citing lack of cooperation and stalled proceedings

The International Criminal Court (ICC) announced the closure of its technical office in Caracas, Venezuela, stating that the absence of...

DRC declares Ebola outbreak ended after 42 consecutive days without new infections

On December 1, 2025, the DRC Ministry of Public Health announced that the Ebola Virus Disease outbreak in the Kasai...

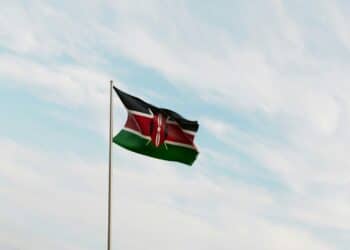

Kenya secures landmark U.S.-backed healthcare financing, becoming first African nation to do so

Kenya has announced a historic U.S.-backed health funding agreement, sparking debate across the continent...

Tanzanian authorities ban all December 9 demonstrations amid heightened post-election crackdown

The Tanzanian government has implemented a nationwide ban on all demonstrations planned for December 9. This date happens to fall...

UK government rolls out sweeping new measures to combat corruption nationwide

There will be no stone left unturned in the fight against corruption. In December 2025, the British government unveiled a...

Benin rocked by unsuccessful coup bid, heightening tensions across West Africa

Benin went through critical times when a group of military officers attempted to overthrow the government and announce, via state...

UN and humanitarian agencies unveil $23 billion plan to support 87 million people affected by conflict, climate shocks and crises in 2026

The United Nations and humanitarian aid partners have created a $23 billion plan to help people affected by conflict, climate...

UN Volunteers commemorates Global Volunteer Day 2025, noting 2.1 billion people participate in monthly volunteer work

This December 5th, UN Volunteers celebrates Global Volunteer Day 2025, a date established to honor the strength and solidarity of...

Red Sea International Film Festival launches in Jeddah, showcasing films from around the world

The fifth Red Sea International Film Festival takes place December 4 to 13, 2025, on the Jeddah Corniche, featuring outdoor...

Venezuela urges OPEC members to push back against mounting U.S. pressure

Venezuela has reached out to OPEC to address what it has called “threatening and illegal activity from the United States.”...

Cyclone Ditwah devastates Sri Lanka, unleashing the worst floods in decades and causing massive loss of life

Sri Lanka is going through one of the most difficult moments in its history. The country was hit by Cyclone...